When I started using Twin Pics AI, I wasn’t looking for another image generator. I already had access to those. What caught my attention instead was the constraint: describe an image in under 100 characters and see how close you get.

That single rule changes how you think. It shifts the focus away from aesthetics and toward language. And once that shift happens, Twin Pics stops feeling like a novelty and starts feeling like a small but surprisingly effective training ground.

Before getting into ratings or features, it helps to explain how it actually feels to use it over time, because that’s where its value (and its limits) becomes clear.

How My First Week With Twin Pics Actually Went

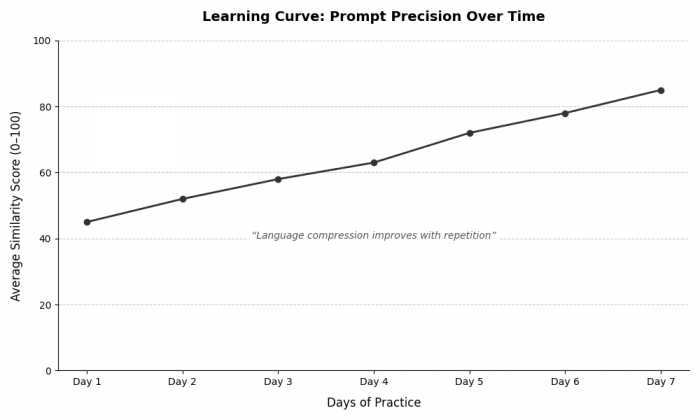

The first day felt almost trivial. You see a reference image, type something obvious, hit generate, get a score. Mine was bad. Not catastrophically bad, but bad enough to make it clear that “describing what you see” and “describing what an AI understands” are not the same thing.

By day three, I stopped describing objects and started describing relationships: foreground versus background, lighting cues, camera angle, texture, mood.

By day seven, I was counting characters like currency.

That progression matters, because it explains why Twin Pics works better as a habit than as a one-off experiment. And that habit is built around a very tight loop.

The Core Loop, Experienced Rather Than Explained

On paper, the system is simple:

- One reference image every 24 hours

- One prompt (100 characters max)

- One generated result

- One similarity score (0–100)
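The character limit is the one rule in that list you can check before you ever hit generate. Here is a minimal sketch of a budget checker, assuming the limit is inclusive (exactly 100 characters is allowed) and that characters are counted the way Python's `len` counts them. The function name and return shape are my own, not anything Twin Pics exposes.

```python
MAX_PROMPT_CHARS = 100  # limit stated in the article; treating it as inclusive is an assumption


def check_prompt(prompt: str, limit: int = MAX_PROMPT_CHARS) -> dict:
    """Report how much of the character budget a prompt uses.

    Hypothetical helper for drafting prompts offline. len() counts
    Unicode code points, which may differ from how the site counts.
    """
    text = prompt.strip()  # leading/trailing whitespace is wasted budget
    used = len(text)
    return {
        "prompt": text,
        "used": used,
        "remaining": limit - used,  # negative when over budget
        "fits": used <= limit,
    }


if __name__ == "__main__":
    draft = "low-angle shot, red fox on snowy ridge, pine forest behind, soft dawn light"
    report = check_prompt(draft)
    print(f"{report['used']}/{MAX_PROMPT_CHARS} used, fits: {report['fits']}")
```

Running a draft through something like this before submitting makes the "characters as currency" feeling concrete: you see exactly how much room is left for one more lighting or angle cue.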

In practice, it feels more like a feedback instrument than a game. The score isn’t “fun points.” It’s a rough but immediate signal of how your wording landed.

The public leaderboard adds another layer. Seeing high-scoring prompts next to their outputs does something subtle: it demystifies success. You start to notice patterns in what people leave out as much as in what they include.

This naturally leads into a bigger realization: Twin Pics isn’t testing creativity as much as it’s testing compression.

What Twin Pics Is Really Measuring

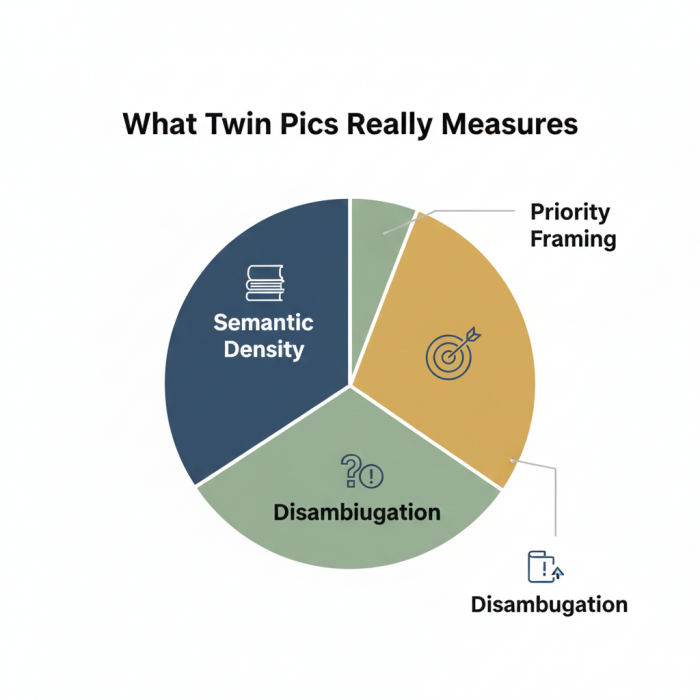

After a few weeks, it became clear that Twin Pics rewards three things consistently:

- Semantic density: how much visual information fits into each word

- Disambiguation: removing assumptions humans make automatically

- Priority framing: choosing what not to describe

That’s why the 100-character limit isn’t just a gimmick. It’s the whole point. Without it, the exercise collapses into trial-and-error prompting like any other image tool.
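To make the compression idea tangible, here is a rough sketch that strips filler words from a draft and reports what share of the words carry content. The filler list and both function names are hypothetical, and this crude ratio is only a proxy for "semantic density". It is not how Twin Pics scores anything.

```python
# Hypothetical filler list for illustration only; not taken from Twin Pics.
FILLER_WORDS = {"a", "an", "the", "is", "are", "there", "of", "with", "very", "that"}


def _is_filler(word: str) -> bool:
    """True if the word, ignoring case and trailing punctuation, is filler."""
    return word.lower().strip(",.") in FILLER_WORDS


def compress_prompt(prompt: str) -> str:
    """Drop filler words while keeping the remaining words in order."""
    return " ".join(w for w in prompt.split() if not _is_filler(w))


def density(prompt: str) -> float:
    """Crude proxy: fraction of words that are not filler (0.0 to 1.0)."""
    words = prompt.split()
    if not words:
        return 0.0
    return sum(1 for w in words if not _is_filler(w)) / len(words)


if __name__ == "__main__":
    verbose = "There is a very old lighthouse on the edge of a cliff with a stormy sky"
    tight = compress_prompt(verbose)
    print(f"{len(verbose)} chars -> {len(tight)} chars: {tight}")
    print(f"density before: {density(verbose):.2f}, after: {density(tight):.2f}")
```

The characters freed this way are what you then spend on disambiguation and priority framing: a camera angle, a lighting cue, or the one detail the model would otherwise guess wrong.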

This design choice also explains who seems to get the most value out of it.

Who This Actually Feels Useful For (Based on Use, Not Marketing)

From my own use and from scanning community discussions and reviews, Twin Pics resonates most with:

- Students learning AI concepts for the first time

- Educators who need something concrete to demonstrate “prompt engineering”

- Writers and designers trying to improve visual description skills

- Developers curious about human-AI interpretation gaps

It’s noticeably less useful if your goal is output volume, production assets, or visual polish. And that’s fine, because Twin Pics doesn’t pretend otherwise.

That honesty shows up again when you look at access and pricing.

Pricing and Access: Why the Free Model Matters Here

As of 2025, the core Twin Pics experience is still free:

- Daily challenge: free

- Scoring engine: free

- Leaderboard access: free

There is a separate, more traditional image generator product in the same ecosystem, positioned for paid or enterprise use. But importantly, it’s kept distinct. The game itself isn’t overloaded with upgrade nudges.

That separation matters, because the moment Twin Pics becomes a funnel, the learning value drops. Right now, it still feels like an experiment that’s allowed to exist on its own terms.

Which brings me to the builder behind it.

The Builder’s Fingerprints Are Visible (In a Good Way)

Twin Pics comes from Chris Sevillano (often known as Chris Sev), someone associated with the “build in public” mindset. You can feel that in the product.

It doesn’t feel like something reverse-engineered from a growth deck. It feels like a question someone genuinely wanted to explore: how well can people communicate visually under constraint?

That same ecosystem includes other tools focused on transformation rather than generation, which reinforces the idea that Twin Pics is more about understanding AI than selling AI.

Still, no product earns trust just by intent. It earns it by being honest about trade-offs.

Where Twin Pics Is Strong, and Where It Very Clearly Isn’t

Where it works well (from use and reviews):

- Teaching prompt precision quickly

- Making abstract AI concepts tangible

- Encouraging careful language choices

- Creating a low-pressure daily learning habit

Where it falls short (by design):

- No image refinement or iteration

- No editing controls at all

- Character limit can feel restrictive for complex scenes

- No API or extensibility for developers

I don’t see these as flaws so much as boundaries. Problems arise only when people expect Twin Pics to be something it never claims to be.

Which leads to a more useful framing.

How Twin Pics Fits Into the Broader AI Tool Landscape

Twin Pics isn’t competing with Midjourney, Firefly, or other image generators. It sits before them.

It’s closer to:

- A typing tutor for visual language

- A daily kata for prompt writing

- A sandbox for testing how wording changes meaning

You don’t use it to ship work. You use it to get better at the thinking that leads to better work elsewhere.

That’s why, over time, it starts to feel less like a game and more like an instrument.

My Personal Ratings (Based on Long-Term Use)

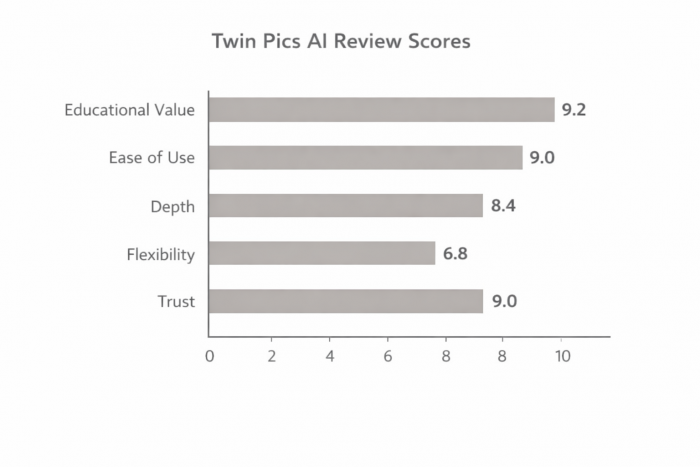

| Aspect | Score (out of 10) |
| --- | --- |
| Educational value | 9.2 |
| Prompt feedback clarity | 8.8 |
| Ease of use | 9.0 |
| Depth over time | 8.4 |
| Flexibility | 6.8 |
| Non-promotional trust | 9.0 |
| Overall | 8.6 |

The lower scores aren’t criticisms so much as acknowledgements of scope. Twin Pics is narrow, and intentionally so.

Final Thoughts: Why Twin Pics Sticks Without Trying To

What I appreciate most about Twin Pics AI is restraint.

It doesn’t promise mastery.

It doesn’t disguise randomness.

It doesn’t push productivity narratives.

It simply asks you to try, score, reflect, and try again.

In a space full of tools trying to look indispensable, Twin Pics is comfortable being useful in a small, specific way. And sometimes, that’s exactly what makes a tool worth returning to.

It won’t replace your creative stack.

But it might quietly improve how you use it.
