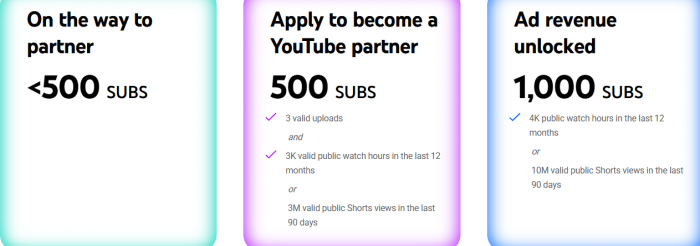

YouTube is drawing a line in the sand. Starting July 15, the platform will enforce stricter monetization policies to crack down on mass-produced, low-effort videos, many of which are driven by AI. The move comes amid growing concern over the rise of what the internet now dubs "AI slop" — formulaic, low-quality content that floods feeds without adding value.

The platform’s updated Partner Program rules aim to protect the integrity of YouTube’s content ecosystem without stifling genuine creators who use automation responsibly.

What Is “AI Slop” and Why Is It a Problem?

The term “AI slop” refers to mass-generated content that lacks originality, often created using AI tools with minimal human input.

Examples include:

- Voiceover slideshows with generic narration

- Repetitive video formats (e.g., “top 10” countdowns with stock footage)

- Slight rewordings of the same script across dozens of videos

- Thin commentary or purely SEO-driven uploads

These videos often rack up millions of views by gaming the algorithm, but creators and users alike have voiced concerns about the platform becoming saturated with low-effort uploads.

What’s Changing in YouTube’s Policy?

The YouTube Partner Program (YPP) already requires content to be advertiser-friendly, but the new update explicitly targets:

- Mass-produced content with little or no variation

- Templated videos that lack significant originality

- Heavily automated videos made primarily with AI without human transformation

- Repackaged media (e.g., reused footage, voiceovers, or scripts) without commentary or educational context

“We’re not banning AI. We’re just drawing the line at content that doesn’t meet our originality standards,” a YouTube spokesperson clarified in an internal update.

What Is Still Allowed?

YouTube emphasized that using AI tools isn’t against the rules, as long as they’re used creatively and responsibly. Content that adds value, demonstrates human involvement, or transforms the source material is still monetizable.

Allowed use cases include:

- Original commentary with AI-edited visuals

- Voiceover content that includes personal insights or storytelling

- AI-assisted scripting with original video editing or animations

- Tutorials and explainers with human-led structure and intention

In short: AI can assist the creative process, but it cannot replace it entirely.

What’s the Creator Community Saying?

The news sparked wide discussion on Reddit, YouTube creator forums, and Discord channels.

- Supportive creators believe this will help level the playing field. “Finally, some breathing room for original voices,” said one indie YouTuber on /r/PartneredYouTube.

- Skeptics worry enforcement could be vague. “It’s not clear where the ‘AI-helpful’ vs. ‘AI-sloppy’ line is,” one user posted on /r/NewTubers.

- Clarifiers noted this is an enforcement update, not a new rule: “YouTube isn’t banning AI, just applying monetization filters more aggressively.”

Who Will Be Most Affected?

The hardest hit will be:

- Automation-heavy channels pushing 20+ videos per day using templates

- Content farms focused on trending topics with recycled assets

- Dropshipping and affiliate marketers using faceless videos to push SEO content

- Niche explainer channels that rely solely on text-to-speech (TTS) voiceovers and stock B-roll

Some creators may receive sudden demonetization notices and need to file an appeal if their content is flagged.

Is This the End of “Faceless” YouTube?

Not entirely — but it’s definitely a shift.

Faceless YouTube content (videos without the creator appearing on screen) isn’t being banned. But faceless automation without originality is on its way out.

Creators can still thrive without showing their face, but they'll need to offer:

- Unique scripting

- Fresh editing styles

- Strong research or insights

- Value-driven narration

What About Transparency and Disclosure?

Another important theme in the policy update: disclosure.

If your content includes synthetic voices, AI-generated avatars, or other potentially misleading elements, YouTube expects you to disclose this clearly. Undisclosed use of deepfakes, voice cloning, or misleading AI could result in broader enforcement actions — not just demonetization, but potential video takedowns or account warnings.

What Happens After July 15?

- YouTube will begin reviewing monetized videos under the updated policy

- Creators may receive warnings or demonetization flags

- YouTube’s algorithm may also downrank repetitive or templated uploads

- Channels flagged for repetitive slop may need to reapply for YPP status

- Enforcement will be gradual, but the intent is clear: reward quality over quantity

AI Isn’t Going Anywhere — But It’s Getting Rules

This isn’t YouTube turning its back on AI.

In fact, YouTube itself has experimented with AI tools like:

- Dream Screen for Shorts

- AI dubbing for multilingual content

- AI-powered editing in YouTube Studio

What’s happening is a value reset. Tools are fine. Spam is not.

Final Takeaway

If you’re a creator using AI to support, enhance, or speed up production, you’re likely safe. But if you’re automating for automation’s sake, expect trouble.

This update is YouTube’s way of saying: Be original or be invisible.

Want to stay monetized? Create videos with AI, not because of it.
