
The AI writing assistant sector continues to grow quickly, driven by rising demand for academic and technical content across universities and research institutions. Tools positioned for scholarly use face growing scrutiny, especially those promising real citations, originality, and immunity to AI detection. Writeless AI sits directly in this high-stakes corner of the market, branding itself as a research-focused writing engine that produces academic papers it claims are accurate, plagiarism-free, and undetectable.

This review breaks down those claims and evaluates how Writeless actually performs in real academic scenarios.

Quick Verdict: Writeless AI at a Glance

| Claim | Factual Assessment in 2026 | Score (Out of 5) |
|---|---|---|
| Real Academic Citations | Delivers usable citations for common topics but still requires manual source verification. | 4/5 |
| 100% Plagiarism-Free | Produces original text but not immune to similarity or structural overlap. User checks are essential. | 3/5 |
| Undetectable by AI | Results vary significantly. Often flagged without substantial human editing. | 2/5 |
| Pricing | Low annual cost and strong value for heavy users. | 5/5 |
| Best Use Case | Fast drafts, outlines, and formatted academic structures. | N/A |

I. Feature Analysis: The Academic Writing Specialist

Writeless AI positions itself as a purpose-built tool for academic and research writing rather than a general conversational model. Its design centers around structure, citations, and long-form output.

1.1. Core Features for Scholarly Work

Writeless bundles together several functions that directly support student workflows.

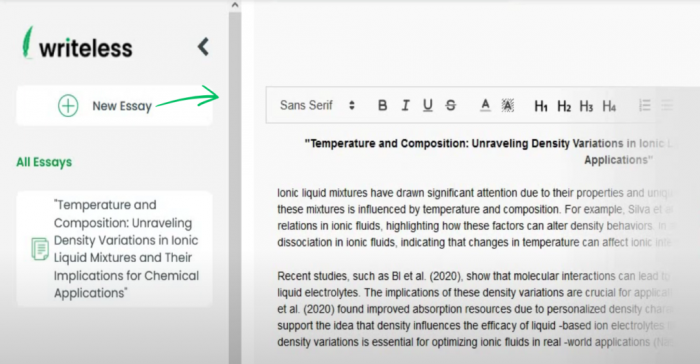

Long-Form Academic Generation

The system can produce papers up to around 20,000 words, which appeals to users handling theses, dissertations, and extended essays. This aligns with broader market demand, where academic and technical writing account for a major share of AI writing tool usage.

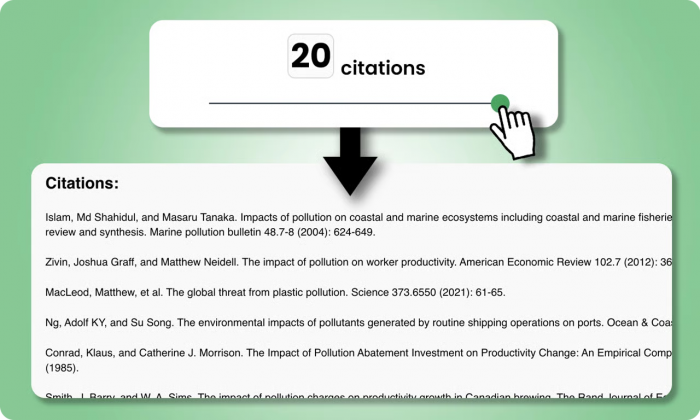

Automated Citation Builder

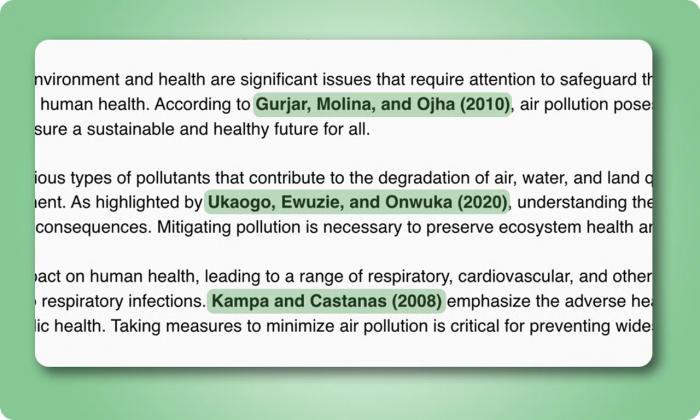

One of its strongest capabilities is its multi-format citation engine. It generates in-text citations and reference lists across APA, MLA, Chicago, Harvard, and Vancouver. Many users choose Writeless specifically to avoid the time-consuming formatting work required by universities.

Claimed Research Integration

The tool states that it “pulls from real scholarly databases” to reduce hallucination. While it does produce citations that look authentic, the reliability varies depending on topic complexity. Users still need to confirm the source exists, is relevant, and is quoted accurately.

1.2. The “Mimics Your Style” Claim

Writeless promotes a feature that adapts to the user’s writing voice.

In practice, the results are mixed. Some users report more natural sentence flow compared to standard LLMs. Others mention that the writing occasionally slips into generic patterns, awkward transitions, or factual gaps that require manual cleanup. Style mimicry helps reduce obvious AI signatures, but it is not consistent enough to guarantee undetectability.

II. Performance Audit: Citations, Plagiarism, and AI Detection

This is the area where Writeless makes its boldest promises and where scrutiny matters most. Academic integrity depends heavily on accuracy, originality, and transparency.

2.1. Citation Reliability and Scholarly Sources

Writeless does a better job than many general AI tools when it comes to producing sources that resemble real academic references. It often cites actual papers, particularly for well-established topics. The challenge is precision. Details such as page numbers, publication years, and author order are sometimes off, and on niche subjects the tool may still generate fabricated or mismatched sources.

Experts continue to stress that AI cannot be relied on as a standalone research tool. Even when a citation looks real, its relevance or accuracy needs verification. The time saved in formatting is significant, but the responsibility for fact-checking stays with the user.
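The manual check described above can be made systematic. The Python sketch below compares the fields of a generated citation against a record the user has verified by hand (for example, from the publisher's page) and reports which fields differ. The `Citation` class, its field names, and the sample data are hypothetical illustrations, not part of Writeless or any citation library.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Citation:
    """Hypothetical minimal citation record; field names are illustrative."""
    authors: tuple[str, ...]  # order matters in most citation styles
    year: int
    title: str
    pages: str


def mismatched_fields(generated: Citation, verified: Citation) -> list[str]:
    """Return the names of fields where the generated citation differs
    from the manually verified record."""
    return [
        f.name
        for f in fields(Citation)
        if getattr(generated, f.name) != getattr(verified, f.name)
    ]


# Made-up example data: the generated entry swaps the author order
# and gets the publication year wrong.
verified = Citation(("Lee, A.", "Gomez, R."), 2019, "A Sample Study", "12-34")
generated = Citation(("Gomez, R.", "Lee, A."), 2018, "A Sample Study", "12-34")

print(mismatched_fields(generated, verified))
```

A non-empty result only signals that the entry needs a human look. An empty result confirms the metadata matches, but whether the source is relevant and accurately quoted remains the user's call.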

2.2. Plagiarism-Free vs. Content Quality

Writeless claims its writing is entirely plagiarism-free because it produces text from scratch. Technically, this is true. It does not copy sentences verbatim from identifiable sources. But originality in academia is not limited to phrasing. It also includes argument structure, insights, interpretation, and critical thinking.

Many students misunderstand this distinction. Original text does not equal original ideas.

Writeless can produce solid overviews and summaries, but when pushed into specialized or postgraduate-level content, the writing becomes shallow or repetitive. It lacks the intellectual rigor expected in higher academic tiers.

2.3. The Undetectability Test: User and Expert Consensus

The most controversial claim is that Writeless outputs are “completely undetectable.”

This does not hold up in practice. Raw, unedited text is often flagged by multiple AI detectors. Some users manage to lower detection rates through revisions, rewriting, or mixing their own text with Writeless output. Even then, there is no reliable way to guarantee a score that avoids academic scrutiny.

University policies reinforce a critical point: AI detection is only one factor in misconduct investigations, but a high detection score usually triggers a review. Depending on the institution, students may be asked to provide drafts, explain their research process, or demonstrate authorship.

Relying on any tool’s promise of undetectability is a high-risk approach that can result in academic penalties.

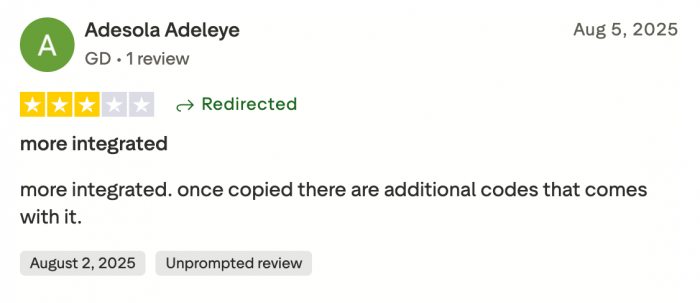

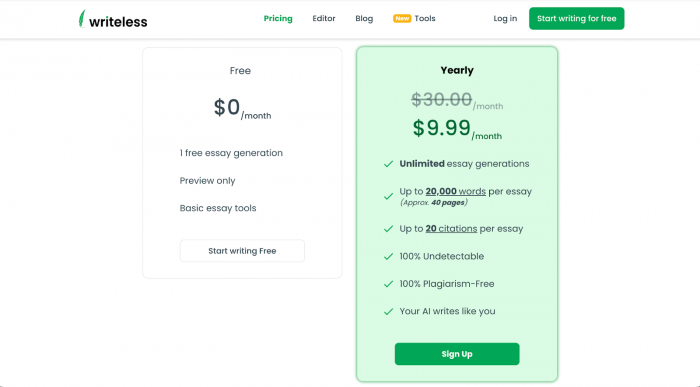

2.4. User Review Analysis: Real-World Performance Feedback

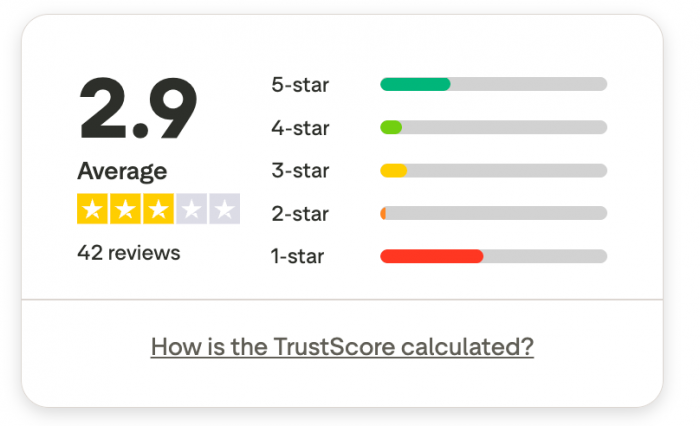

User feedback offers a clearer picture of how Writeless AI performs outside controlled testing, and that picture is mixed. With a TrustScore of around 3 out of 5 from 42 reviews, the platform sits in the middle of the spectrum. The split between highly satisfied users and deeply frustrated ones is unusually sharp, and that polarization is useful for understanding the tool’s true strengths and weaknesses.

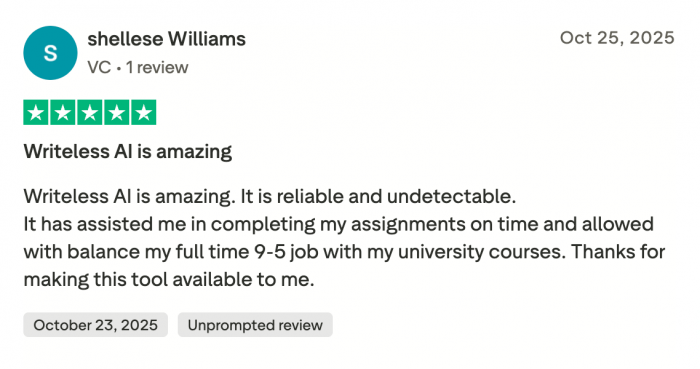

Positive Experiences: Reliability for Basic Academic Work

A consistent theme among five-star reviews is that Writeless helps users manage time pressures and handle repetitive academic tasks. Several students describe it as fast, accurate for common topics, and useful for structuring essays or generating references.

A portion of reviewers also emphasize that it supports their workloads when balancing full-time jobs or multiple classes. For these users, the value lies in efficiency and convenience rather than advanced research capabilities.

One recurring praise point is ease of use, with some noting that the interface is straightforward and that the tool feels intuitive for everyday writing.

Moderate Responses: Helpful but With Limitations

Three-star reviewers generally agree that Writeless delivers volume, but not depth. The most common critique in this group is that the tool generates large blocks of text with minimal formatting control, leaving users to manually adjust structure, headings, or bullet points. Some also point out that the generated content requires filtering or rewriting to reach academic expectations.

These users tend to view Writeless as a supportive tool rather than a reliable final solution.

Negative Feedback: Quality Issues, Technical Failures, and Billing Complaints

The most critical reviews focus on three areas.

1. Output Quality

Several users describe the writing as “word salad,” “nonsensical,” or “below undergraduate level.” Complaints highlight awkward phrasing, incorrect word use, and sentences that fail basic academic scrutiny. A few reviewers provide direct examples that reinforce concerns about internal coherence and grammar.

2. Detectability Problems

Multiple one-star reviews mention that Writeless outputs were flagged by AI detection systems, in some cases scoring as high as 64% AI-generated. These firsthand accounts contradict the product’s undetectability claim and align with the inconsistency highlighted earlier in the performance audit.

3. Subscription and Access Issues

Another strong pattern involves billing frustrations and account access problems. A number of reviewers reported being charged while unable to log in, trouble canceling subscriptions, delayed responses from support, or paying for a service they could not use. The lack of immediate customer assistance appears to be a serious pain point.

Overall Review Landscape

The reviews divide into two clear camps: users who rely on Writeless for quick, simple, structured drafts, and users who find the tool insufficient for deeper academic work or who run into technical issues.

In short, Writeless performs well for foundational tasks but struggles when assignments demand high-level reasoning, and its platform stability and customer support fall short for users who hit problems.

III. Pricing and Target Audience

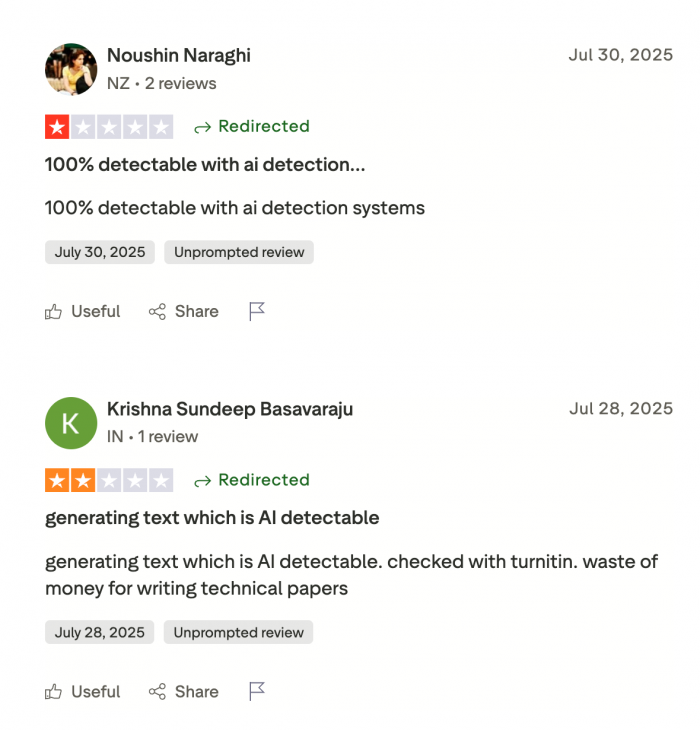

3.1. Pricing Structure and Annual Cost

Writeless keeps its pricing simple. The free plan gives users a single sample generation meant solely for testing. The paid tier, billed annually at a low monthly rate, unlocks unlimited text generation and the full word-count capacity.

At roughly the cost of a single textbook per year, it offers strong value for students who write frequently. Compared to many LLMs that charge usage-based fees, Writeless remains one of the more accessible academic-focused tools.

3.2. Target Audience Fit: From Undergraduate to PhD

Writeless is most effective for:

- Students writing essays, reports, summaries, or structured academic assignments.

- Those who need clean formatting, clear structure, and automated bibliography generation.

It becomes far less suitable when:

- The work demands original research ideas.

- The topic requires deep domain expertise.

- The assignment requires data interpretation or methodology.

Doctoral candidates, research professionals, and scholars usually prefer tools that support literature discovery or data analysis rather than automated content generation.

IV. Conclusion: Academic Integrity vs. Automation

Writeless AI is a polished academic writing assistant that excels in structure, formatting, and efficiency. It takes much of the logistical burden off the writing process by automating bibliography creation and handling long-form outputs with ease. For students juggling multiple assignments, it can be a valuable starting point that helps break through writer’s block or organize ideas.

Its shortcomings come from the claims that matter most in academia. The citations are not consistently reliable. The writing is not inherently research-driven. And the “undetectable” promise does not withstand real testing.

When used responsibly, Writeless can support productivity. When used as a replacement for genuine academic effort, it becomes risky. The most accurate way to describe the tool is this: it produces a strong first draft, not a finished submission.
