The music industry is fighting back against AI-generated deepfakes with lawsuits, platform takedowns, and policy reforms. Learn how Sony Music, artists, and lawmakers are pushing to protect creative rights in the age of generative AI.

Deepfake Music: A Growing Threat to Artists’ Identity

The music industry is facing a rising wave of AI-generated deepfakes: songs created to sound like real artists without their consent. These are no longer just amateur experiments.

Some of these tracks are so realistic that fans often can’t tell the difference. According to Music Business Worldwide, Sony Music alone has already issued takedown notices for over 75,000 such deepfakes.

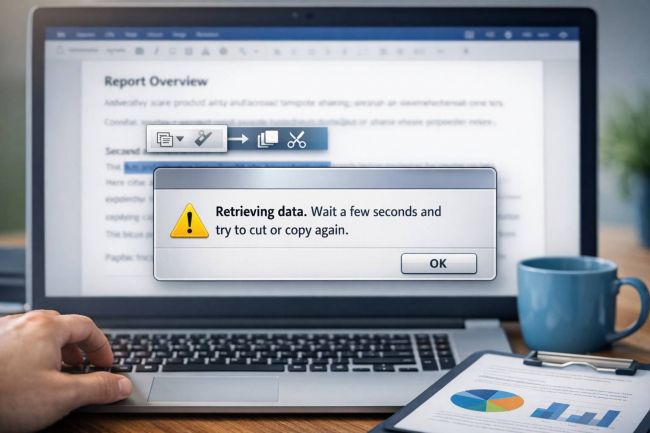

Yet, despite these efforts, AI content continues to appear across major platforms like YouTube, Spotify, and TikTok. This leads us to the next challenge: identifying and removing AI-generated music before it goes viral.

Detecting AI-Generated Songs Isn’t Enough

Pindrop, an information security firm, claims that AI-generated music carries "telltale signs" that can be detected through audio analysis. However, as Tech Xplore points out, detection alone doesn't stop distribution. These tracks continue to flood online platforms faster than they can be flagged or removed.

While tech companies work to improve detection tools, they’re still playing catch-up against increasingly sophisticated AI models trained on vast datasets of copyrighted music.

Major Lawsuits Put AI Startups on Notice

Record labels are now taking the legal route. Major names like Sony Music and Universal Music Group have filed lawsuits against AI startups such as Suno and Udio, accusing them of copyright infringement. These companies are alleged to have trained their AI models on thousands of copyrighted songs without permission or licensing agreements.

This legal strategy marks a turning point: music labels are no longer just reacting. They are proactively challenging the foundations of how these AI models are trained.

Amid these legal battles, there's growing concern about proposed copyright reforms, especially in the UK. The UK government is considering changes that would allow AI companies to use copyrighted material for training unless creators opt out. Sony Music has strongly criticized these reforms, arguing that they favor AI development over creative rights.

Such an opt-out system could create a loophole that lets developers use artists' work legally without a license, an outcome the industry wants to avoid at all costs.

Artists Speak Out Against Unauthorized AI Use

It's not just companies fighting back; individual artists are also speaking up. Celine Dion recently voiced her frustration over AI-generated tracks that falsely use her voice and image. She emphasized that these songs, which are circulating widely online, were made without her consent and do not reflect her artistry.

This personal outcry adds a moral layer to the debate: beyond copyright, it's about respecting human identity and creative ownership.

AI’s Musical Power Is Growing—And So Are Its Challenges

While the industry battles AI misuse, the technology itself keeps evolving. Amazon is already bringing AI music tools to Alexa so users can create their own songs at home, a move that has drawn criticism from major music bodies. The line between creative freedom and artistic theft is becoming blurrier.

As AI models become more capable and more accessible, the music industry must rethink its protection strategies, and do so quickly.

What's Next? A Crossroads Between Tech and Creativity

The struggle between the music industry and generative AI isn't just about takedowns or courtrooms. It's about the future of art, trust, and identity in a digital world. While lawsuits and regulations are part of the solution, long-term success may depend on innovation: new tools, laws, and ethical frameworks that evolve alongside the technology.

As AI continues to reshape music creation, the industry must keep asking: How do we protect human creativity without stifling progress?