Quantum computing is evolving rapidly, yet the technology remains in a transitional phase: it promises breakthroughs but is still far from mass adoption. Major companies like Google, Microsoft, and Amazon are unveiling new chips and error correction methods, while governments and industries invest billions in development. But when will quantum computing finally become usable for everyday applications?

This article explores the current state of quantum computing, real-world use cases, major challenges, and what to expect in the next 5 to 10 years.

What Is Quantum Computing and Why Does It Matter?

Quantum computing is a form of computation that uses quantum bits (qubits) instead of classical binary bits. Unlike a classical bit, which is either 0 or 1, a qubit can be in a superposition of both values, and qubits can be correlated with one another through entanglement. For certain problems, such as factoring large integers or simulating quantum systems, this lets quantum algorithms run exponentially faster than the best known classical methods. For many other tasks the speedup is smaller or absent.
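To make superposition and entanglement concrete, here is a minimal NumPy sketch that simulates two qubits on an ordinary computer. It is an illustration only, not how real hardware is programmed; the function names `bell_state` and `measurement_probabilities` are made up for this example.

```python
import numpy as np

# Single-qubit basis state |0> and the gates used below.
ZERO = np.array([1.0, 0.0])
H = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)  # Hadamard gate
I2 = np.eye(2)
# CNOT with qubit 0 as control and qubit 1 as target.
# Basis order is |00>, |01>, |10>, |11>.
CNOT = np.array([
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
], dtype=float)


def bell_state():
    """Return the amplitudes of (|00> + |11>) / sqrt(2)."""
    state = np.kron(ZERO, ZERO)      # start in |00>
    state = np.kron(H, I2) @ state   # put qubit 0 into superposition
    return CNOT @ state              # entangle qubit 1 with qubit 0


def measurement_probabilities(state):
    """Born rule: each outcome occurs with probability |amplitude|^2."""
    return np.abs(state) ** 2


probs = measurement_probabilities(bell_state())
for label, p in zip(["00", "01", "10", "11"], probs):
    print(label, round(float(p), 3))
```

The two qubits are only ever measured as 00 or 11, each half the time, which is the correlation that entanglement refers to. Note that the simulation stores 2^n amplitudes for n qubits, which is why simulating large quantum systems classically becomes expensive.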

Why is quantum computing important?

- It can potentially break modern encryption algorithms (posing both opportunities and risks).

- It offers speed advantages for drug discovery, material science, climate modeling, and more.

- It may revolutionize financial modeling, logistics, and AI optimization.

Key Developments in Quantum Computing

1. Google’s Willow Chip: 105 Qubits

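Google announced its Willow superconducting chip, which achieved below-threshold error correction: logical error rates fell as more physical qubits were added to the code. On the random circuit sampling benchmark, Willow completed in under five minutes a computation that Google estimates would take a classical supercomputer 10 septillion (10^25) years.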

2. Amazon’s Ocelot Chip

Amazon Web Services introduced Ocelot, a chip using bosonic “cat” qubits. AWS says this architecture could cut the cost of implementing quantum error correction by up to 90%, a critical step toward scalable quantum hardware.

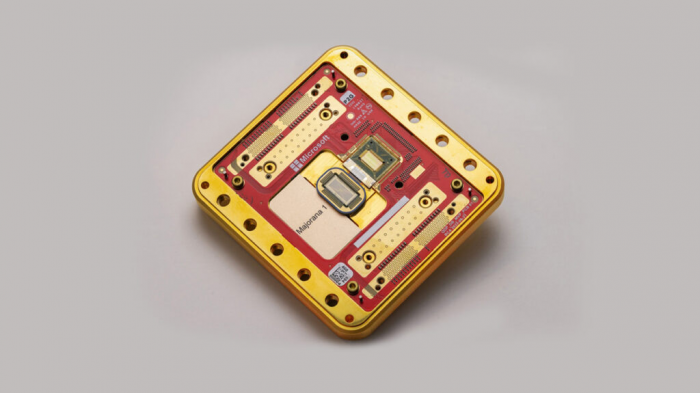

3. Microsoft’s Majorana Qubits

Microsoft unveiled progress on Majorana-based topological qubits, designed to reduce hardware noise and improve fault tolerance. These qubits aim to reduce the number of physical qubits needed for one logical qubit.

What Can Quantum Computers Actually Do?

While full-scale commercial use is still years away, quantum computing is showing potential in the following areas:

- Pharmaceuticals: Simulating molecular structures to accelerate drug development.

- Energy: Modeling battery materials and chemical reactions for clean energy.

- Finance: Enhancing risk modeling, fraud detection, and portfolio optimization.

- Supply Chains: Improving logistics routing and traffic simulation (e.g., Volkswagen’s Beijing taxi test).

- Cybersecurity: Preparing for post-quantum encryption standards to defend against quantum attacks.

Timeline Debate: Is Quantum Usable in 5 Years or 20?

Industry experts remain divided:

- Google executives say real-world applications in materials science and chemistry could arrive within five years.

- NVIDIA’s Jensen Huang and others remain cautious, suggesting 20 years may be more realistic due to hardware limitations.

The debate underscores the gap between laboratory breakthroughs and systems that work at industrial scale.

Barriers Still Blocking Quantum’s Full Potential

Despite rapid advances, several challenges remain unresolved:

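- Decoherence: Qubits lose their quantum state quickly, typically within tens to hundreds of microseconds on superconducting hardware, so they require extreme cooling and isolation from noise.

- Error Correction: Common estimates put the overhead at roughly 1,000 or more physical qubits for a single reliable logical qubit, and even then errors are suppressed rather than eliminated.

- Hardware Complexity: Many quantum processors, including superconducting chips, must operate in cryogenic environments, making commercial use impractical for now.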

- Cost and Accessibility: Building and maintaining quantum systems is expensive and limited to top institutions.
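The error correction overhead can be estimated with a rough back-of-the-envelope model. The sketch below assumes a rotated surface code and the common heuristic that the logical error rate scales as A · (p / p_th)^((d+1)/2) for code distance d. The threshold `p_threshold=1e-2`, the prefactor `0.1`, and the target error rate are illustrative assumptions, not measured values for any specific device.

```python
def physical_qubits(distance):
    """Qubits in one rotated surface-code patch: d^2 data + (d^2 - 1) measurement."""
    return 2 * distance ** 2 - 1


def logical_error_rate(p_phys, distance, p_threshold=1e-2, prefactor=0.1):
    """Heuristic scaling p_L ~ A * (p / p_th) ** ((d + 1) / 2). Assumed model."""
    return prefactor * (p_phys / p_threshold) ** ((distance + 1) / 2)


def smallest_distance(p_phys, target, p_threshold=1e-2, prefactor=0.1):
    """Smallest odd code distance whose estimated logical error meets the target."""
    if p_phys >= p_threshold:
        # Above threshold, adding qubits makes the logical qubit worse, not better.
        raise ValueError("physical error rate must be below the threshold")
    d = 3
    while logical_error_rate(p_phys, d, p_threshold, prefactor) > target:
        d += 2
    return d


# Assumed inputs: 0.1% physical error rate, 5e-13 target logical error rate.
d = smallest_distance(p_phys=1e-3, target=5e-13)
print("code distance:", d)
print("physical qubits per logical qubit:", physical_qubits(d))
```

Under these assumed numbers the model lands on distance 23, or 1,057 physical qubits per logical qubit, which is in line with the "over 1,000" figure often quoted. The `ValueError` branch shows why operating below threshold matters: only then does increasing the code distance reduce the logical error rate.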

Who’s Leading the Quantum Computing Race?

| Company | Technology Focus | Latest Milestone |
| --- | --- | --- |
| Google | Superconducting Qubits | Willow chip with 105 qubits |
| Amazon | Bosonic Qubits (Cat Qubits) | Ocelot chip for error reduction |
| Microsoft | Topological Qubits | Majorana-based prototype |
| IBM | Modular Scaling & Cloud Access | Roadmap to 1000+ qubits |
| IonQ | Trapped Ion Qubits | Modular, high-fidelity systems |

These companies are investing heavily in quantum hardware, cloud integration, and algorithm development to bring quantum computing to enterprises.

Post-Quantum Cryptography: The Urgent Use Case

One immediate driver of adoption is post-quantum cryptography. Quantum computers could break RSA and ECC encryption, forcing organizations to shift to quantum-safe algorithms.

The U.S. National Institute of Standards and Technology (NIST) published its first finalized quantum-resistant cryptographic standards (FIPS 203, 204, and 205) in August 2024, with widespread deployment expected by 2027.

Governments and tech giants are building quantum-secure infrastructure to prepare for future threats.
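The threat to RSA comes from Shor’s algorithm, which reduces factoring to finding the multiplicative order of a number modulo n. The sketch below shows that reduction on a toy key. The order-finding step is done here by brute force, as a classical stand-in for the part a quantum computer would accelerate, and all function names are hypothetical. It only works for tiny moduli such as the textbook example n = 3233.

```python
from math import gcd


def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    Brute force here; this is the step Shor's algorithm speeds up.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_via_order(n, a):
    """Try to split n using the order of a modulo n; return (p, q) or None."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g          # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None               # odd order: try another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None               # trivial square root of 1: try another a
    p = gcd(half - 1, n)
    return p, n // p


def factor(n):
    """Try successive bases until one yields a non-trivial factorization."""
    for a in range(2, n):
        result = factor_via_order(n, a)
        if result is not None:
            return tuple(sorted(result))
    return None


def recover_private_key(n, e):
    """Derive the RSA private exponent once n has been factored."""
    p, q = factor(n)
    phi = (p - 1) * (q - 1)
    return pow(e, -1, phi)        # modular inverse, Python 3.8+


n, e = 3233, 17                   # toy public key (3233 = 53 * 61)
d = recover_private_key(n, e)
ciphertext = pow(65, e, n)
print("factors:", factor(n))
print("decrypted:", pow(ciphertext, d, n))
```

Real RSA moduli are 2048 bits or longer, far beyond brute-force order finding, which is why the attack only becomes practical with a large, error-corrected quantum computer.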

Quantum Cloud Computing: Who Offers It?

Several platforms offer remote access to quantum hardware via the cloud:

- Amazon Braket

- Microsoft Azure Quantum

- IBM Quantum

- Rigetti and IonQ APIs

These services let developers run quantum algorithms on small-scale systems to experiment with Shor’s, Grover’s, and variational quantum algorithms.
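As a rough idea of what such an experiment computes, here is a plain NumPy simulation of Grover’s search. It does not use any provider’s SDK, and `grover_search` is a made-up name for this sketch. It tracks the full vector of amplitudes, which is only feasible for a small number of qubits.

```python
import numpy as np


def grover_search(num_qubits, marked_index):
    """Simulate Grover's algorithm for one marked item among 2**num_qubits.

    Returns the final measurement probabilities and the iteration count.
    """
    n_states = 2 ** num_qubits
    # Start in the uniform superposition over all basis states.
    state = np.full(n_states, 1 / np.sqrt(n_states))
    # About (pi / 4) * sqrt(N) iterations maximize the success probability.
    iterations = int(np.floor(np.pi / 4 * np.sqrt(n_states)))
    for _ in range(iterations):
        state[marked_index] *= -1          # oracle: flip the marked amplitude's sign
        state = 2 * state.mean() - state   # diffusion: reflect about the mean
    return np.abs(state) ** 2, iterations


probs, iterations = grover_search(num_qubits=3, marked_index=5)
print("iterations:", iterations)
print("most likely outcome:", int(np.argmax(probs)))
print("success probability:", round(float(probs[5]), 3))
```

With 3 qubits (8 items) the marked item is found with high probability after 2 iterations, compared with an average of about 4 lookups for a classical scan. That quadratic saving, rather than an exponential one, is what Grover’s algorithm offers.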

FAQs About Quantum Computing

What is the current state of quantum computing?

Quantum systems are transitioning from lab research to experimental deployment. Major firms have crossed the 100-qubit milestone, but real-world applications are still 5–20 years away.

When will quantum computing be commercially usable?

Some experts forecast limited real-world use by 2030, especially in materials science and finance. Fully scalable quantum computing may take two decades.

Who is leading in quantum computing?

Companies like Google, Amazon, Microsoft, IBM, and IonQ are at the forefront, each taking different approaches in qubit design and error correction.

Why is quantum computing important?

It has the potential to solve problems classical computers can’t, like simulating molecules, optimizing large systems, and breaking conventional cryptography.

Final Thoughts: Quantum Is Real, But Still Early

In 2025, quantum computing is not a buzzword—it’s a serious scientific endeavor with major breakthroughs in hardware, algorithms, and accessibility. But commercial adoption is still constrained by error rates, physical limitations, and engineering complexity.

The next 5–10 years will be less about flashy benchmarks and more about gradual deployment, starting in sectors like biotech, energy, and cryptography. For now, quantum computing remains a frontier technology—one inching toward impact, not ubiquity.
