The Great AI Tug-of-War

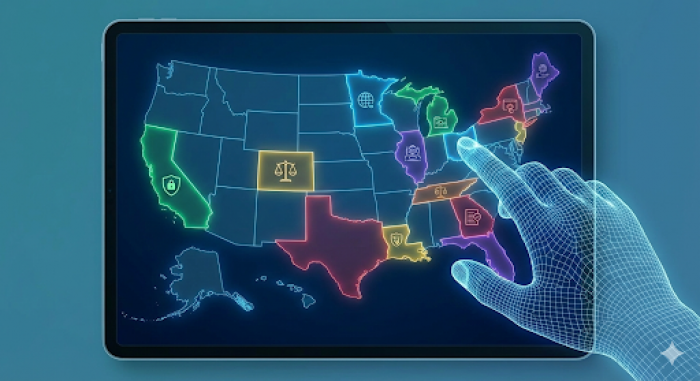

For months, tension has been growing between Washington, D.C., and state capitals across the country. Several states, such as California and Colorado, have been proactive, passing their own laws to ensure AI is used safely and fairly. They have created rules covering areas like data privacy and discrimination by AI programs.

However, the Trump administration has been pushing for a single, national standard. The argument is that a “patchwork” of different laws in each state would be too confusing and burdensome for American companies, potentially slowing down innovation and putting the U.S. at a disadvantage against competitors like China.

The Initial Plan: Block the States

Until recently, all signs pointed to a showdown. The administration was reportedly drafting an executive order designed to override or preempt these state laws. The plan was to use federal power to stop states from enforcing their own AI rules, effectively wiping the slate clean in favor of a national policy that had yet to be fully defined.

This approach was cheered by many big tech companies but criticized by consumer protection groups and state leaders who felt their citizens needed immediate protection.

A Surprising Pivot?

Now, new reports suggest a change of heart. It appears the administration might be reconsidering its aggressive stance. Rather than pursuing an outright fight, it may be willing to let some state regulations stand, at least for now.

This potential pivot could have a few explanations. For one, fighting legal battles against many different states would be incredibly complicated and time-consuming. There's also the political reality that many of these state laws are popular with voters who are concerned about the rapid growth of AI.

Instead of a complete blockade, we might see a more complex scenario emerge. The federal government could focus on creating broad national standards for things like national security and international competition, while leaving room for states to address specific local concerns like consumer protection and data privacy.

What This Means for You

So, what does this all mean for the average person? It means that the rules governing the AI tools you use, from chatbots to recommendation algorithms, might continue to vary depending on where you live. If you are in California, you might have different data privacy rights than someone in Texas.

This situation is still fluid, and the final policy has not been set. But for now, it seems the “patchwork” of state AI laws will remain in place, at least for the immediate future.

We will continue to monitor this story and bring you updates as they happen.
