Genmo AI isn’t another plug-and-play video toy—it’s an open-source experiment in generative media that’s already turning heads. Built on the powerful Mochi 1 model and driven by the Asymmetric Diffusion Transformer (AsymmDiT) architecture, Genmo delivers surprisingly fluid AI-generated videos from text or image prompts.

Let’s dive deep into how it works, what it does differently, and whether it’s the right fit for your creative or research projects.

Under the Hood: How Genmo’s Architecture Works

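At the core of Genmo’s realism is AsymmDiT, a transformer design whose asymmetry lies between modalities rather than between frames. As Genmo describes Mochi 1, text and video tokens are attended to jointly, but each modality gets its own MLP layers, and the visual stream carries roughly four times as many parameters as the text stream. Concentrating capacity on visual reasoning is what Genmo credits for smooth 30fps animations with better object stability.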

AsymmDiT is the architecture behind Mochi 1, an open-source video model released via GitHub, so the whole stack is open to inspection and collaboration. You can inspect the weights, experiment with the model, or even fine-tune it for your own use case.
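To get a feel for what "asymmetric" means in practice, the sketch below compares per-block weight counts for a wide visual stream and a narrow text stream. The hidden sizes (3072 and 1536) are assumptions taken from the public Mochi 1 model card, not from this article, and the block layout is deliberately simplified: each stream is treated as an ordinary transformer block with square projections and a 4x feed-forward expansion, which is not necessarily how Genmo implements it.

```python
# Rough per-block weight count for two transformer streams of different width.
# All sizes are assumptions for illustration, not values confirmed by this article.
VISUAL_DIM = 3072      # assumed visual-stream hidden size
TEXT_DIM = 1536        # assumed text-stream hidden size
MLP_EXPANSION = 4      # assumed feed-forward expansion factor


def mlp_params(hidden_dim: int, expansion: int = MLP_EXPANSION) -> int:
    """Weights in a two-layer feed-forward block (biases ignored)."""
    inner = hidden_dim * expansion
    return hidden_dim * inner + inner * hidden_dim


def projection_params(hidden_dim: int) -> int:
    """Weights in square Q, K, V and output projections (biases ignored)."""
    return 4 * hidden_dim * hidden_dim


def stream_params(hidden_dim: int) -> int:
    """Total weights for one simplified block of a single stream."""
    return mlp_params(hidden_dim) + projection_params(hidden_dim)


visual = stream_params(VISUAL_DIM)
text = stream_params(TEXT_DIM)
ratio = visual / text

print(f"visual stream: {visual:,} weights per block")
print(f"text stream:   {text:,} weights per block")
print(f"ratio:         {ratio:.1f}x")
```

Because linear-layer weights grow with the square of the hidden size, doubling the width gives about four times the parameters, which lines up with the "nearly 4x" figure Genmo quotes for the visual stream.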

Genmo Replay vs Mochi: Which Engine Should You Use?

Genmo offers two generation modes, each with its own advantages:

| Mode | Speed | Quality | Ideal For |
| --- | --- | --- | --- |
| Replay | Fast | Moderate | Quick demos, idea testing |
| Mochi | Slower | High | Final videos, detailed animation |

Most creators start with Replay and graduate to Mochi as their prompt precision improves.

Key Technical Features Worth Knowing

- Text-to-Video: Describe a scene, get a 4–16 second video with camera motion and lighting effects.

- Image-to-Video with Brush Control: Upload an image and animate specific elements using brush strokes.

- Prompt Parameterization: Control duration, aspect ratio (16:9, 9:16, 1:1), zoom level, and motion type.

- Multiple Style Modes: Choose from cinematic, abstract, or stylized rendering paths.

- Discord & GitHub Integration: Collaborate, report bugs, or fork the model from the active open-source community.
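If you script or batch your prompts, it can help to check settings against the limits listed above before spending credits. The following is a minimal sketch: `GenerationSettings` is a hypothetical helper class and is not part of any Genmo API. Only the allowed values (aspect ratios, 4–16 second duration, style names) come from this article.

```python
from dataclasses import dataclass
from typing import List

ALLOWED_ASPECT_RATIOS = {"16:9", "9:16", "1:1"}          # ratios listed in the article
ALLOWED_STYLES = {"cinematic", "abstract", "stylized"}   # style modes listed in the article
MIN_SECONDS, MAX_SECONDS = 4, 16                         # clip length range from the article


@dataclass(frozen=True)
class GenerationSettings:
    """Hypothetical settings holder; not a Genmo API object."""
    prompt: str
    aspect_ratio: str = "16:9"
    duration_s: int = 4
    style: str = "cinematic"

    def validate(self) -> List[str]:
        """Return a list of human-readable problems (empty if none)."""
        problems = []
        if not self.prompt.strip():
            problems.append("prompt is empty")
        if self.aspect_ratio not in ALLOWED_ASPECT_RATIOS:
            problems.append(f"unsupported aspect ratio: {self.aspect_ratio}")
        if not MIN_SECONDS <= self.duration_s <= MAX_SECONDS:
            problems.append(
                f"duration must be {MIN_SECONDS}-{MAX_SECONDS} s, got {self.duration_s}"
            )
        if self.style not in ALLOWED_STYLES:
            problems.append(f"unknown style: {self.style}")
        return problems


settings = GenerationSettings(
    prompt="A glowing city at dusk with neon cars flying, cyberpunk style",
    aspect_ratio="9:16",
    duration_s=8,
)
print(settings.validate() or "settings look fine")
```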

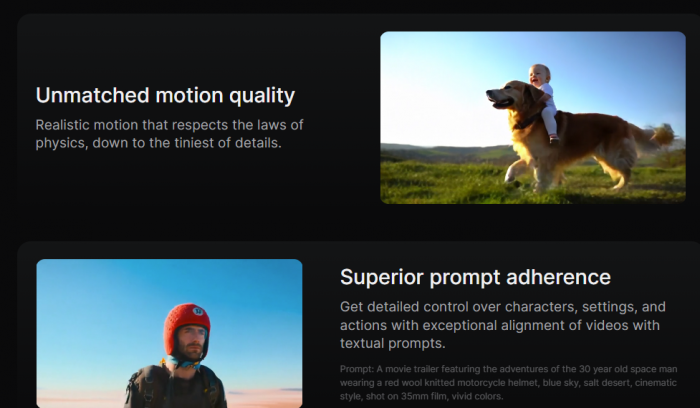

Video Quality: What You Can Expect

- 30 FPS video (Mochi mode)

- Smooth panning and transitions

- Vivid colors and stylized filters

- Short clips (4–16 seconds)

- Occasional distortions on image inputs or over-stylized prompts
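To put those numbers in perspective, a quick calculation shows how many frames the model has to keep coherent at the quoted frame rate and clip lengths. This is plain arithmetic on the figures above, not a statement about how Genmo schedules frames internally.

```python
FPS = 30  # frame rate quoted for Mochi mode


def frame_count(duration_s: float, fps: int = FPS) -> int:
    """Number of frames in a clip of the given length."""
    return round(duration_s * fps)


for seconds in (4, 8, 16):
    print(f"{seconds} s clip -> {frame_count(seconds)} frames")
```

A 4-second clip is 120 frames and a 16-second clip is 480, which helps explain why longer, more detailed prompts are more prone to drift and distortion.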

Tips for Writing Better Prompts in Genmo AI

Genmo’s performance is deeply tied to how well you write your prompts.

Here are some reliable structures that work:

- Scene + Action + Style

  “A glowing city at dusk with neon cars flying, cyberpunk style”

- Subject + Mood + Camera Movement

  “A medieval knight walking through fog, cinematic slow zoom”

- Artistic Filter + Environment + Lighting

  “Van Gogh painting of a busy Paris street at night, top-down pan”

Tips:

- Avoid overly short prompts.

- Include lighting, weather, movement, and framing if possible.

- Try rephrasing if the results look distorted.
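The structures above are easy to template if you generate many variations. Here is a small sketch that joins prompt components and flags prompts that are probably too short. The function names and the six-word threshold are illustrative assumptions, not Genmo guidance.

```python
MIN_WORDS = 6  # arbitrary cutoff for "overly short"; adjust to taste


def build_prompt(*parts: str) -> str:
    """Join non-empty prompt components with commas."""
    cleaned = [p.strip() for p in parts if p and p.strip()]
    return ", ".join(cleaned)


def is_too_short(prompt: str, min_words: int = MIN_WORDS) -> bool:
    """True if the prompt has fewer words than the chosen threshold."""
    return len(prompt.split()) < min_words


# Subject + Mood + Camera Movement
prompt = build_prompt("A medieval knight walking through fog", "cinematic slow zoom")
print(prompt)
print("too short:", is_too_short(prompt))
```

Keeping subject, style, and camera movement as separate arguments makes it simple to swap one component at a time when a result looks distorted.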

How to Generate AI Videos with Genmo (Step-by-Step Tutorial)

Creating a video on Genmo is straightforward.

Here's how to get started:

- Visit the playground at genmo.ai/play and sign in.

- Select mode: Choose between text-to-video or image-to-video.

- Enter your prompt: Add details like setting, motion, lighting, and mood.

- Adjust settings: Pick aspect ratio, duration, and motion intensity.

- Preview your video before generating.

- Click Generate: After rendering, download or share your creation.

Bonus: If you use the brush tool in image mode, you can animate specific regions of a static image.

Technical Limitations & Bugs

- Slow rendering for Mochi videos (5–10 minutes)

- Unpredictable distortions in complex image inputs

- Watermarks on free videos

- No support for sound or voice generation yet

Community Insight: Reddit & GitHub Feedback

From Reddit threads to GitHub issues, user consensus highlights:

“Genmo’s motion quality is unmatched for an open tool. But expect to iterate prompts.”

“Image-to-video is hit or miss—great when it works.”

“Brush tool needs polishing, but it’s way better than Kaiber for custom shots.”

Developers actively respond to GitHub issues and are receptive to Discord feature requests.

Genmo AI Pricing: What You Get

| Plan | Price | Credits/Month | Watermark | Commercial Use |
| --- | --- | --- | --- | --- |
| Free | $0 | 50 + 200 bonus | Yes | Yes |
| Lite | $10 | 1,200 | No | No |
| Standard | $30 | 5,000 | No | No |

Replay videos cost ~10 credits; Mochi costs ~100. Paid plans unlock watermark-free HD rendering and higher priority.
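Those credit costs translate into a rough monthly video budget. The sketch below works it out for the two paid plans using the approximate figures above. The Free plan is left out because the article does not say whether the 200 bonus credits recur each month.

```python
REPLAY_COST = 10    # approximate credits per Replay video
MOCHI_COST = 100    # approximate credits per Mochi video

# Paid plans as listed in the pricing table
PLANS = {
    "Lite": {"price_usd": 10, "credits": 1200},
    "Standard": {"price_usd": 30, "credits": 5000},
}


def videos_per_month(credits: int, cost: int) -> int:
    """Whole videos affordable with the given credits."""
    return credits // cost


for name, plan in PLANS.items():
    replay = videos_per_month(plan["credits"], REPLAY_COST)
    mochi = videos_per_month(plan["credits"], MOCHI_COST)
    usd_per_mochi = plan["price_usd"] / mochi
    print(f"{name}: {replay} Replay or {mochi} Mochi videos "
          f"(about ${usd_per_mochi:.2f} per Mochi video)")
```

On these numbers, Lite covers about 12 Mochi videos a month and Standard about 50, so drafting in Replay and reserving Mochi for final renders stretches a plan considerably.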

Genmo AI vs Kaiber vs Runway ML: Which AI Video Tool Wins?

| Tool | Realism | Prompt Control | Open Source | Best For |
| --- | --- | --- | --- | --- |
| Genmo AI | High | Advanced | Yes | Developers, researchers |
| Kaiber | Medium | Moderate | No | Creators, marketers |
| Runway ML | High | Low | No | Video editors, media teams |

Verdict: Genmo is unmatched in transparency and motion control.

Who Should NOT Use Genmo?

- Creators expecting instant, polished outputs without prompt tuning

- Users needing sound, voice, or storytelling support

- Beginners unfamiliar with generative art tools

Who Genmo Is Built For

- Developers who want to explore open models

- Educators looking for custom teaching visuals

- Artists & animators aiming to bring stills to life

- AI enthusiasts experimenting with prompt-based media

Genmo AI Review: Pros, Cons, and User Feedback

Pros

- High-quality motion with Mochi

- Fully open-source model for devs

- Advanced control over video generation

- Fair pricing with a useful free tier

- Active Discord & GitHub support

Cons

- Rendering delays (especially in the free tier)

- No audio or dialogue generation

- Steep prompt learning curve

- Inconsistent results for image-to-video

Final Word

Genmo AI is more than a tool—it’s an evolving platform built by and for the creative and developer community. If you like testing, fine-tuning, and open innovation, Genmo gives you one of the most powerful video generation environments available in 2025.

Explore. Prompt. Iterate. And build something visually stunning, one frame at a time.
