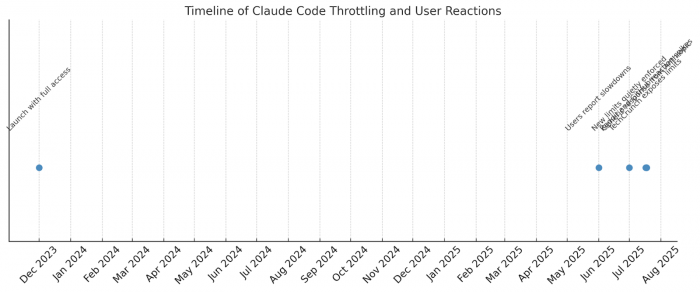

In what many users are calling a silent betrayal, Anthropic has quietly tightened usage limits for Claude Code Max subscribers, leaving developers blocked mid-task with no warning. The $200/month power tier now appears throttled, and there has been no email, notice, or changelog entry about it.

"Limit Reached": When Premium Means Getting Less

Until recently, subscribers to Claude Code Max believed they were buying stability: 20× more usage than Pro, designed for high-frequency development workflows.

But by mid-July 2025, many began encountering a system message:

"Claude usage limit reached. Try again at 7:43pm."

And that was it. No official update. No warning. Just silence.

Some users report hitting usage ceilings within 30 minutes, a far cry from the multi-hour sessions the tier once enabled.

The Reddit Threads and GitHub Logs That Exposed It All

The tipping point came from user reports across Reddit, GitHub, and Discord. Developers, startup teams, and prompt engineers began noticing that their productivity suddenly nosedived.

"Used to get four hours of uninterrupted coding. Now? I'm rate-limited in under an hour."

—r/ClaudeAI

“There was no notice. Nothing. Just limits that kill your workflow and leave you guessing.”

—GitHub issue

Some even compared it to being rug-pulled by their own SaaS provider.

The Invisible Downgrade: How Anthropic Masked the Change

For users, the new limits are only part of the frustration. The bigger problem is the lack of transparency:

- No email

- No pricing page updates

- No in-app notification

- No API rate visibility

The Max plan still advertises high throughput. But in practice, requests are throttled or blocked outright without clarity on daily/hourly caps.

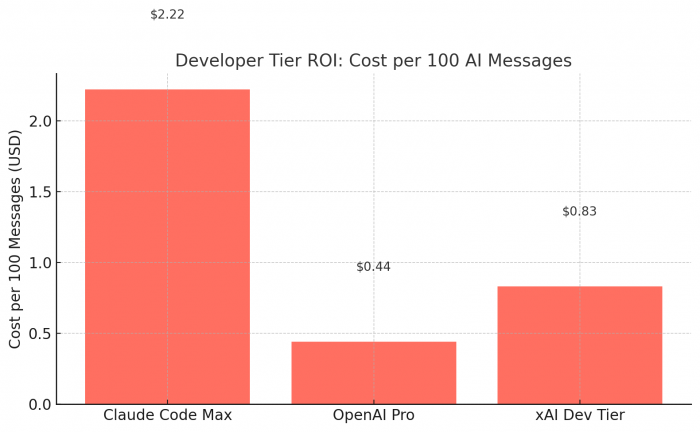

The $200 Frustration: Max Plan’s ROI Just Vanished

The Claude Code Max plan costs $200/month and was originally marketed to developers building tools, running long coding sessions, and exploring AI-assisted automation at scale.

But for users:

- "20× usage" became a black box

- Value for money dropped overnight

- Even base-tier users reported more consistent access

As one Hacker News commenter put it:

“You’re effectively paying $6/day to be rate-limited worse than a free-tier OpenAI account.”

GPU Load or User Lockout? The Real Reason Remains Hidden

Many users speculate that Anthropic is struggling with GPU capacity or per-user inference costs. In that reading, rather than raising prices or redesigning its plans, the company quietly cut access to reduce backend load, hoping few would notice.

But power users did notice. And they’re angry.

"Feels like bait and switch. Charge more if you must. Don’t ghost your paying customers."

Trust Collapse: When Engineers Lose Faith in AI Tools

Claude's user base includes founders, researchers, AI engineers, and early adopters. These aren't passive consumers; they're platform amplifiers.

By introducing breaking changes without disclosure, Anthropic triggered:

- Broken automations

- Delayed delivery schedules

- Loss of developer trust

The incident has prompted some users to cancel their plans and others to migrate their workflows to OpenAI, xAI, or Hugging Face.

The Apology That Didn't Explain Anything

When asked for comment, Anthropic offered a one-line response:

"We’re aware that some Claude Code users are experiencing slower response times, and we’re working to resolve these issues."

No mention of limits. No admission of changes. Just a soft deflection.

To many users, that silence speaks volumes.

What Happens Now?

Anthropic is at a crossroads:

- Reintroduce limits transparently with documented tiers

- Apologize publicly and restore prior access levels

- Or continue throttling and risk a mass exodus of core users

If it chooses the third path, its most engaged tier may become its most alienated one.

Summary: Claude Max Isn’t Max Anymore

- Usage caps were silently reduced for Claude Code Max users

- No communication was provided about the change

- Workflows are disrupted, especially for high-frequency developers

- Trust erosion is prompting users to explore other LLMs

- Anthropic has yet to clarify whether this change is temporary or permanent
