At WWDC 2025, Apple did something uncharacteristic—it introduced artificial intelligence not with fireworks, but with finesse. “Apple Intelligence” (not to be confused with generative AI chatbots) isn’t about blowing minds; it’s about smoothing lives.

Instead of parading a Siri reboot, Apple wrapped intelligence into the core of its operating systems (iOS 26, macOS Tahoe, and watchOS 26) with understated but powerful tools like call summarization, automatic transcription, real-time translation, and image-aware Smart Replies. The delivery? Seamless, on-device, and grounded in privacy.

AI That Sees and Understands

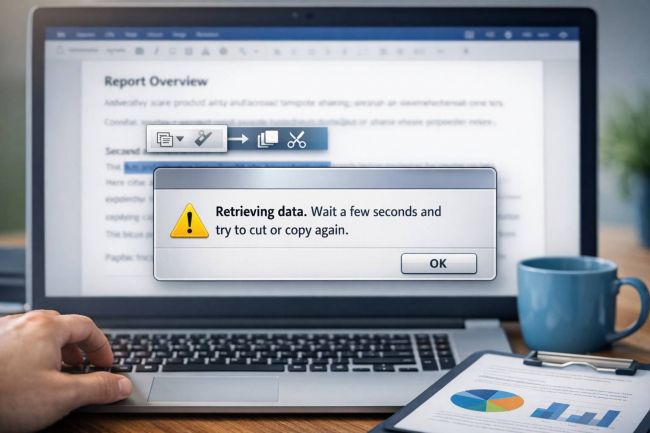

One of Apple’s most refined additions is Visual Intelligence. Rather than ask users to prompt a chatbot, Apple lets you interact with what’s already on your screen. Taking a screenshot brings up context-aware actions: identify products, extract text, create events, or forward the image to ChatGPT for deeper insight, all without leaving the app.

Developers get access to Apple's Foundation Models framework, which allows integration with just a few lines of Swift. It supports both on-device (3B parameter) and cloud-based models hosted by Apple, with explicit opt-in from users for any cloud use.
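To give a sense of what "a few lines of Swift" might look like, here is a minimal sketch against the Foundation Models framework. It only exercises the on-device system model. The `summarize` and `makePrompt` helpers, the `SummaryError` type, and the prompt wording are invented for this example, and the availability annotation assumes the OS releases that ship the framework, so treat it as a starting point rather than Apple's reference code.

```swift
#if canImport(FoundationModels)
import FoundationModels
#endif

// Hypothetical error type for this sketch.
enum SummaryError: Error {
    case modelUnavailable
}

// Hypothetical helper: builds the prompt text (plain string handling).
func makePrompt(for text: String) -> String {
    return "Summarize the following in one sentence:\n\(text)"
}

#if canImport(FoundationModels)
// Assumption: the framework is present from iOS 26 / macOS 26 onward.
@available(iOS 26.0, macOS 26.0, *)
func summarize(_ text: String) async throws -> String {
    // Bail out if Apple Intelligence is disabled or the device is ineligible.
    guard case .available = SystemLanguageModel.default.availability else {
        throw SummaryError.modelUnavailable
    }
    // A session keeps the conversation state for the on-device model.
    let session = LanguageModelSession(instructions: "You are a concise assistant.")
    let response = try await session.respond(to: makePrompt(for: text))
    return response.content
}
#endif
```

From an async context, `try await summarize(someText)` would return the model's reply as a string. On hardware without Apple Intelligence, the call throws instead of silently falling back.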

Siri Stays Behind (For Now)

Despite anticipation, Siri's long-overdue overhaul didn’t arrive. Apple admitted the revamped assistant is not yet up to its standards. While natural language improvements are planned, full conversational AI remains sidelined, unlike OpenAI's GPT-4o or Google Gemini, both of which already run across platforms.

This soft rollout drew criticism. Analysts at Wedbush and Morgan Stanley called the keynote “underwhelming.” Bloomberg reported that investor reaction was lukewarm, and Apple's stock dipped slightly after the event.

Privacy-First AI by Design

While competitors chase large-scale generative models, Apple focused on on-device inference—a strategic bet rooted in privacy. Even when using cloud-based AI, the system asks for user consent and keeps data compartmentalized, aligning with Apple’s long-standing reputation for user security.
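The consent rule described above can be pictured as a small routing decision. The sketch below is purely illustrative: `InferenceRoute` and `route(fitsOnDevice:userOptedIntoCloud:)` are hypothetical names, not Apple APIs, and the logic is an assumption about the general pattern (prefer local inference, require opt-in before anything goes to the cloud), not a description of Apple's implementation.

```swift
// Hypothetical model of where a request may run; not an Apple API.
enum InferenceRoute: Equatable {
    case onDevice
    case cloud
    case blocked   // needs the cloud, but the user has not opted in
}

// Hypothetical routing rule: prefer local inference, require consent for cloud.
func route(fitsOnDevice: Bool, userOptedIntoCloud: Bool) -> InferenceRoute {
    if fitsOnDevice {
        return .onDevice
    }
    return userOptedIntoCloud ? .cloud : .blocked
}
```

Under this rule, a request that fits on the device never consults the consent flag at all, which is the privacy-first default the article describes.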

This approach is consistent with the white papers and technical documentation Apple has published on its Developer Portal.

Catching Up to AI Giants?

Apple Intelligence doesn’t match the raw capability of GPT-4o or Gemini 1.5, especially in open-ended conversation or complex reasoning. Benchmarks suggest even mid-tier open-source models outperform Apple’s offering in generative quality.

Yet Apple’s play isn’t about AI supremacy; it’s about control, usability, and invisibility. As The Verge noted, Apple’s AI isn’t flashy. It’s “ambient,” and that may prove more enduring.

What’s Launching and When?

- iOS 26 public beta arrives in July 2025; full release alongside the new iPhone lineup in September

- Apple Intelligence will work only on devices with A17 Pro or M1 chips and above

- Siri upgrades and third-party app integration roll out later in 2025

- Tools like Image Playground, Genmoji, and smart proofreading are coming to iPhone, iPad, and Mac

Final Thoughts

Apple isn’t rushing to match AI titans in capability. Instead, it’s threading AI into the user experience in ways that feel native, controlled, and—most importantly—private. That restraint might look like a missed opportunity today. But with its deep ecosystem, Apple’s slow AI burn could prove the most sustainable fire of all.