Ever wondered how AI startups seem to have endless supplies of data to train their models? You're not alone.

In the AI world, data is the new oil—except unlike oil, companies aren't limited to what's naturally in the ground. They're actively mining it from across the digital landscape, often through clever scraping techniques.

I've spent years working with AI startups and have watched them evolve from simple web scrapers to sophisticated data acquisition machines. The techniques I'll share today have helped numerous startups build robust datasets without breaking the bank (or the law).

Why data scraping matters for AI startups

AI models are like teenagers—they need to consume massive amounts of information before they can make intelligent decisions. But unlike human teenagers, AI systems can't learn just by existing in the world.

For startups, particularly those without Google-sized budgets, creative data acquisition strategies are essential. The most successful AI companies I've worked with don't just have better algorithms—they have better data collection methods.

Legal and ethical considerations (don't skip this!)

Before diving into the technical stuff, let's get something straight: not all data scraping is created equal from a legal perspective.

Here are some quick guidelines:

- Public data: Generally fair game, but check Terms of Service

- Rate limiting: Respect it or risk IP bans

- Personal data: Regulated in most jurisdictions; under GDPR you need a lawful basis such as explicit consent

- Competitive intelligence: Legal gray area—proceed with caution

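The scraping landscape changed significantly with the hiQ Labs v. LinkedIn case. In 2019 the Ninth Circuit held that scraping publicly accessible profiles likely isn't a violation of the Computer Fraud and Abuse Act. The Supreme Court vacated that ruling in 2021 and sent it back for reconsideration, and the Ninth Circuit reaffirmed its position in 2022. The case didn't settle everything, though: a district court later found that hiQ had breached LinkedIn's user agreement, so Terms of Service and contract claims still matter even when the data is public.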

That said, I'm not a lawyer (and if your startup is serious about data scraping, you should definitely consult one).

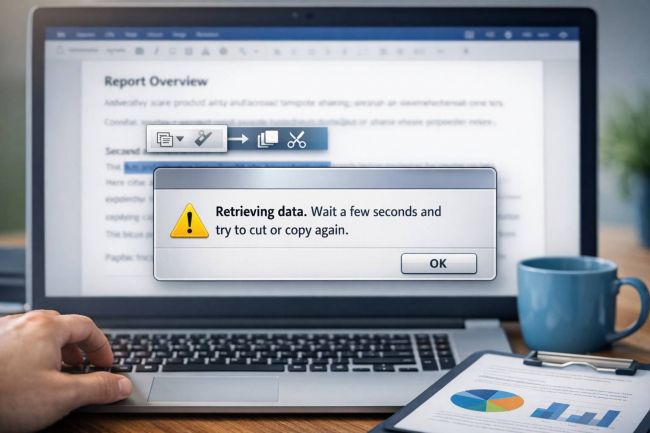

Method 1: Automated web scraping

This is the bread and butter of data acquisition for AI startups.

Here's how it typically works:

- Identify target websites with valuable data relevant to your AI model

- Build a crawler using tools like Scrapy, Beautiful Soup, or Selenium

- Implement proxy rotation to avoid IP bans

- Set up respectful crawling patterns (delays between requests, respect robots.txt)

- Extract structured data from the HTML

- Clean and normalize the gathered data

- Store in your database for training purposes

A simple Python example using Beautiful Soup might look like:

    import random
    import time

    import requests
    from bs4 import BeautifulSoup

    # Pool of User-Agent strings to rotate through
    user_agents = [
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36',
        'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15',
        # Add more user agents for rotation
    ]

    def scrape_page(url):
        """Fetch one page and return the matching elements, or None on failure."""
        headers = {'User-Agent': random.choice(user_agents)}
        try:
            # A timeout keeps one stalled server from hanging the whole job
            response = requests.get(url, headers=headers, timeout=10)
        except requests.RequestException:
            response = None

        # Be respectful - don't hammer the server
        time.sleep(random.uniform(1, 3))

        if response is not None and response.status_code == 200:
            soup = BeautifulSoup(response.text, 'html.parser')
            # Extract the data you need
            data = soup.find_all('div', class_='your-target-class')
            return data

        return None
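The example above handles delays but not the "respect robots.txt" step from the list. Here's a minimal sketch of that check using Python's standard `urllib.robotparser`. The `example-bot` user agent, the sample rules, and the 2-second fallback delay are all placeholders I made up for illustration.

```python
from urllib import robotparser

# Sample robots.txt content, invented for this example
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_robot_parser(robots_txt):
    """Parse the text of a robots.txt file that has already been downloaded."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser, url, user_agent="example-bot"):
    """Return True if the rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


def polite_delay(parser, user_agent="example-bot", default=2.0):
    """Use the site's Crawl-delay if it declares one, else a fallback delay."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


parser = build_robot_parser(SAMPLE_ROBOTS)
print(is_allowed(parser, "https://example.com/private/page"))
print(polite_delay(parser))
```

In a live crawler you would typically call `parser.set_url(...)` and `parser.read()` once per domain instead of passing the text in, then check `is_allowed` before every request.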

Pro tip: Always implement error handling and resumable scraping for large jobs. Nothing's worse than having your scraper crash at 95% completion with no way to resume.
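One way to make a job resumable is to keep a checkpoint file of finished URLs and skip them on restart. This is a rough sketch, not a production design: `run_resumable`, the JSON checkpoint format, and the `fetch` callable are all names I chose for the example.

```python
import json
from pathlib import Path


def load_done(checkpoint):
    """Return the set of URLs already processed, or an empty set on first run."""
    if checkpoint.exists():
        return set(json.loads(checkpoint.read_text()))
    return set()


def run_resumable(urls, fetch, checkpoint):
    """Call fetch(url) for every URL that is not yet in the checkpoint file.

    Failed URLs are collected and left out of the checkpoint,
    so rerunning the job retries them.
    """
    done = load_done(checkpoint)
    failed = []
    for url in urls:
        if url in done:
            continue
        try:
            fetch(url)
        except Exception as exc:  # broad on purpose: one bad page should not kill the job
            failed.append((url, repr(exc)))
            continue
        done.add(url)
        # Persist progress after every success so a crash loses at most one page
        checkpoint.write_text(json.dumps(sorted(done)))
    return failed
```

Usage would look like `run_resumable(url_list, scrape_and_store, Path("done.json"))`, where `scrape_and_store` is whatever function fetches and saves a single page. Rewriting the whole checkpoint on every page is fine for thousands of URLs; for millions you'd probably want an append-only log or a database instead.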

Method 2: API integration

Why break into the house when you can walk through the front door?

Many services offer APIs that provide structured data access:

- Research available APIs in your domain

- Check rate limits and pricing (some are free up to certain quotas)

- Generate API keys and implement authentication

- Write connectors to periodically pull and store data

- Handle pagination and rate limiting in your code

Here's how you might set up a Twitter API connector:

    import json

    import tweepy

    # Written against recent Tweepy 4.x releases. This uses the v1.1 search
    # endpoint; whether you can call it depends on your API access tier.

    # Set up authentication (replace the placeholders with your own credentials)
    auth = tweepy.OAuth1UserHandler(
        "CONSUMER_KEY", "CONSUMER_SECRET", "ACCESS_TOKEN", "ACCESS_SECRET"
    )

    # Create API object; Tweepy sleeps automatically when a rate limit is hit
    # (the old wait_on_rate_limit_notify argument was removed in Tweepy 4)
    api = tweepy.API(auth, wait_on_rate_limit=True)

    def collect_tweets(query, count=10000):
        collected_tweets = []

        # api.search was renamed to api.search_tweets in Tweepy 4
        for tweet in tweepy.Cursor(api.search_tweets, q=query, lang="en").items(count):
            tweet_data = {
                'text': tweet.text,
                'created_at': str(tweet.created_at),
                'user': tweet.user.screen_name,
                'followers': tweet.user.followers_count
            }
            collected_tweets.append(tweet_data)

            # Save periodically in case of failure
            if len(collected_tweets) % 1000 == 0:
                with open(f'{query}_tweets_{len(collected_tweets)}.json', 'w') as f:
                    json.dump(collected_tweets, f)

        return collected_tweets

The beauty of APIs is that they give you clean, structured data without the messy extraction step.
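Tweepy hides pagination and rate limiting for you, but most APIs don't come with a wrapper that does. Below is a generic sketch of handling both yourself. It assumes a cursor-style API; `fetch_page` and the `RateLimited` exception are hypothetical stand-ins for whatever your HTTP client does when it gets a page or an HTTP 429.

```python
import time


class RateLimited(Exception):
    """Raised by the (hypothetical) fetch_page callable on an HTTP 429."""


def fetch_all(fetch_page, max_retries=5, base_delay=1.0, sleep=time.sleep):
    """Follow cursor-based pagination, backing off exponentially on rate limits.

    fetch_page(cursor) must return (items, next_cursor);
    next_cursor is None on the last page.
    """
    results = []
    cursor = None
    while True:
        for attempt in range(max_retries):
            try:
                items, next_cursor = fetch_page(cursor)
                break
            except RateLimited:
                # Wait 1s, 2s, 4s, ... before retrying the same page
                sleep(base_delay * 2 ** attempt)
        else:
            raise RuntimeError(f"gave up after {max_retries} rate-limited attempts")
        results.extend(items)
        if next_cursor is None:
            return results
        cursor = next_cursor
```

The `sleep` parameter exists only so the function can be tested without real waiting. If the API sends a `Retry-After` header, honoring that value is usually better than a fixed backoff schedule.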

Method 3: Public datasets with a twist

Not everything needs to be scraped from scratch.

There are treasure troves of public datasets available:

- Kaggle Datasets: Over 50,000 public datasets

- Google Dataset Search: Indexes datasets across the web

- Government Open Data: Census, weather, economic data

- Academic Repositories: Research data across disciplines

But here's the twist successful AI startups employ: they don't just use these datasets as-is. They:

- Combine multiple sources to create unique training sets

- Augment existing data with additional features

- Clean and normalize more thoroughly than others

- Create synthetic examples based on patterns in the data

This approach gives them proprietary datasets without starting from zero.
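To make the "combine, augment, clean" idea concrete, here's a small sketch that merges several record lists, drops duplicates after normalization, and adds one derived feature. The record shape (`{'text': ...}`) and the `n_words` feature are assumptions for illustration; real pipelines would use whatever schema the datasets share.

```python
def normalize_text(text):
    """Lowercase and collapse whitespace so near-identical rows compare equal."""
    return " ".join(text.lower().split())


def merge_sources(*sources):
    """Merge lists of {'text': ...} records, dropping normalized duplicates.

    Each kept record gains a 'source' index (which input list it came from)
    and a simple derived feature, 'n_words'.
    """
    seen = set()
    merged = []
    for index, records in enumerate(sources):
        for record in records:
            key = normalize_text(record["text"])
            if not key or key in seen:
                continue  # skip empty rows and rows already seen
            seen.add(key)
            merged.append(
                {**record, "text": key, "source": index, "n_words": len(key.split())}
            )
    return merged


kaggle_rows = [{"text": "Hello  World"}, {"text": ""}]
gov_rows = [{"text": "hello world"}, {"text": "New row here"}]
print(merge_sources(kaggle_rows, gov_rows))
```

Exact-match deduplication after normalization is the simplest option. Catching paraphrases or near-duplicates needs fuzzier techniques, which this sketch doesn't attempt.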

Method 4: Browser extensions and user consent

Some clever AI startups have created browser extensions that:

- Provide genuine value to users (price comparison, readability tools, etc.)

- Request explicit consent to collect anonymized browsing data

- Capture relevant information as users browse the web

- Send data back to central servers for processing

This method has two massive advantages:

- It's usually compliant with data protection laws when done right

- It gives you access to data behind login walls (with user consent)

Honey, the shopping assistant (acquired by PayPal for $4 billion) used this approach to gather pricing data across the web.
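On the receiving side, "consent" and "anonymized" have to be enforced in code, not just promised in a privacy policy. Here's a minimal server-side sketch of that idea. The event fields, the `ALLOWED_FIELDS` allow-list, and the function names are all hypothetical; I'm not describing how any particular extension works.

```python
import hashlib
import hmac

# Hypothetical allow-list: only these fields are ever stored
ALLOWED_FIELDS = {"url_domain", "price", "currency"}


def pseudonymize(user_id, secret_key):
    """Replace a raw user ID with a keyed hash that can't be reversed without the key."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_event(event, secret_key):
    """Return a minimized event, or None when the user has not opted in."""
    if not event.get("consent"):
        return None
    # Keep only allow-listed fields; everything else is dropped
    cleaned = {k: v for k, v in event.items() if k in ALLOWED_FIELDS}
    cleaned["user"] = pseudonymize(event["user_id"], secret_key)
    return cleaned
```

Keep in mind that a keyed hash is pseudonymization rather than true anonymization. Under GDPR, pseudonymized data still counts as personal data, so this reduces risk but doesn't remove your obligations.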

Method 5: Social media mining

Social platforms are goldmines of behavioral, preference, and language data:

- Set up listening tools for relevant keywords and hashtags

- Collect public posts, comments, and interactions

- Analyze sentiment, topics, and trends

- Build user preference models

Combining these social listening practices gives you a clearer picture of how your audience talks and what it cares about, which in turn informs both product decisions and the data you prioritize for training.
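As a toy illustration of the "analyze sentiment, topics, and trends" step, here's a sketch that counts hashtags and scores posts against a tiny word list. The lexicon is invented for the example and far too small for real use; in practice you'd swap in a trained sentiment model.

```python
import re
from collections import Counter

HASHTAG = re.compile(r"#(\w+)")

# Toy lexicon, made up for this example
POSITIVE = {"love", "great", "good"}
NEGATIVE = {"hate", "bad", "broken"}


def top_hashtags(posts, n=3):
    """Count hashtags case-insensitively and return the n most common."""
    counts = Counter(tag.lower() for post in posts for tag in HASHTAG.findall(post))
    return counts.most_common(n)


def lexicon_sentiment(post):
    """Score a post as (# positive words) minus (# negative words)."""
    words = re.findall(r"[a-z']+", post.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


posts = ["Love the new #AI tools #ml", "This #ai demo is broken", "great #ML talk"]
print(top_hashtags(posts, 2))
print([lexicon_sentiment(p) for p in posts])
```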

Method 6: Form partnerships for data exchange

Sometimes the direct approach works best:

- Identify companies with complementary data needs

- Propose mutual value exchanges (not just asking for data)

- Establish clear data-sharing agreements

- Implement secure data transfer mechanisms

I've seen startups successfully partner with:

- Retail businesses to analyze customer behavior

- Content publishers to improve recommendation systems

- Service providers to enhance predictive maintenance
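For the "secure data transfer" step, one building block is signing each payload so the receiving partner can detect tampering. The sketch below uses an HMAC with a shared key. The function names and message format are my own assumptions, and this covers integrity only; you'd still send the data over TLS for confidentiality.

```python
import hashlib
import hmac
import json


def sign_payload(records, shared_key):
    """Serialize records deterministically and attach an HMAC-SHA256 signature."""
    body = json.dumps(records, sort_keys=True, separators=(",", ":"))
    signature = hmac.new(shared_key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "signature": signature}


def verify_payload(message, shared_key):
    """Recompute the signature and return the records only if it matches."""
    expected = hmac.new(
        shared_key, message["body"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    # compare_digest avoids leaking information through timing differences
    if not hmac.compare_digest(expected, message["signature"]):
        raise ValueError("signature mismatch: payload was altered or the key is wrong")
    return json.loads(message["body"])
```

The sender calls `sign_payload` before upload and the receiver calls `verify_payload` on arrival, with both sides holding the same key agreed on in the data-sharing agreement.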

Method 7: Create data magnets

The most sustainable approach? Create something valuable that naturally generates the data you need:

- Build a free tool that solves a genuine problem

- Make data sharing optional, but beneficial to users

- Be transparent about how you'll use the data

- Deliver ongoing value to ensure continued usage

Grammarly is a perfect example—they offer writing assistance while collecting billions of writing samples that help improve their AI.

Common pitfalls to avoid

Even the best AI startups make these mistakes:

- Ignoring data quality: Quantity isn't everything; garbage in, garbage out

- Poor data storage practices: Unstructured hodgepodge becomes unusable

- Overlooking compliance: GDPR, CCPA, and other regulations have teeth

- Single-source dependency: What happens when your main data source changes its policy?

- Scraping without consideration: Being aggressive with scraping can get your IPs banned
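The data quality pitfall is the easiest one to guard against in code. Here's a rough sketch of a gate that rejects incomplete, too-short, or duplicate rows and reports why. The required fields and the 20-character minimum are arbitrary choices for the example.

```python
def quality_report(records, required=("text", "label"), min_chars=20):
    """Split records into clean rows and a count of rejections per reason."""
    clean = []
    rejected = {"missing_field": 0, "too_short": 0, "duplicate": 0}
    seen = set()
    for record in records:
        if any(record.get(field) in (None, "") for field in required):
            rejected["missing_field"] += 1
            continue
        text = record["text"].strip()
        if len(text) < min_chars:
            rejected["too_short"] += 1
        elif text in seen:
            rejected["duplicate"] += 1
        else:
            seen.add(text)
            clean.append(record)
    return clean, rejected
```

Tracking the rejection counts over time is the useful part: a sudden jump in `missing_field` often means a source changed its page layout or API response.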

Final thoughts

Data acquisition is often the overlooked secret sauce of successful AI startups. The algorithms might get all the glory, but without quality data, they're just fancy math with nothing to calculate.

The most ethical and sustainable approach combines several of these methods, for example scraping through proxies that haven't been banned, like those offered by https://roundproxies.com/datacenter-proxy/, with a heavy emphasis on providing value in exchange for data. The days of sneaky, under-the-radar scraping are numbered as regulations tighten.

What are your experiences with data acquisition for AI? Have you tried any of these methods? Let me know in the comments!
